SoundSieve: Seconds-Long Audio Event Recognition on Intermittently-Powered Systems

Abstract

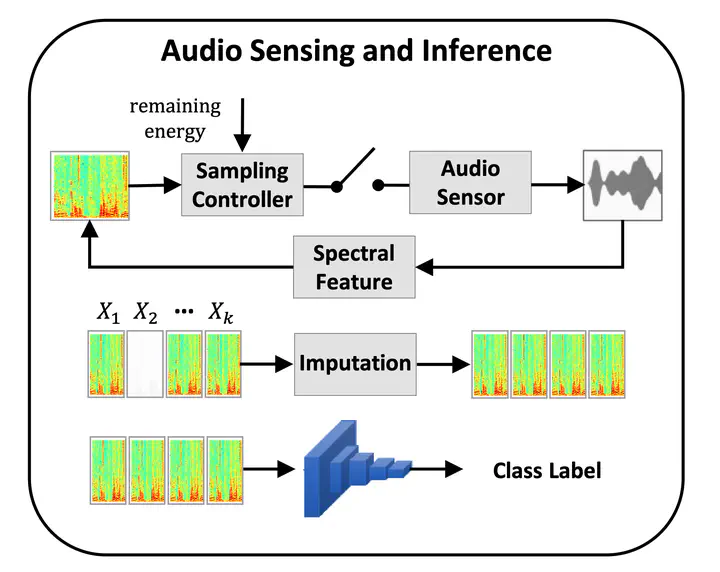

A fundamental problem of every intermittently-powered sensing system is that signals acquired by these systems over a long period of time are also intermittent. As a consequence, these systems fail to capture parts of a longer-duration event that spans multiple charge-discharge cycles of the capacitor that stores the harvested energy. From an application’s perspective, this appears as sporadic bursts of missing values in the input data, which may not be recoverable using statistical interpolation or imputation methods. In this paper, we study this problem in the context of an intermittent audio classification system and design an end-to-end system, SoundSieve, that accurately classifies audio events spanning multiple on-off cycles of the intermittent system. SoundSieve employs an offline audio analyzer that learns to identify and predict the important segments of an audio clip that must be sampled to ensure accurate classification. At runtime, SoundSieve employs a lightweight, energy- and content-aware audio sampler that decides when the system should wake up to capture the next chunk of audio, and a lightweight, intermittence-aware audio classifier that performs imputation and on-device inference. Through extensive evaluations using popular audio datasets as well as real systems, we demonstrate that SoundSieve yields 5%–30% more accurate inference results than the state-of-the-art.
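To make the "sporadic bursts of missing values" concrete, the following minimal sketch simulates how an application might see an audio clip captured across several charge-discharge cycles. It is an illustration only, not SoundSieve's sampler: the fixed on/off durations, the sample rate, and the helper names `power_mask`, `capture`, and `gap_lengths` are all assumptions introduced here, and a real energy harvester would produce irregular cycle lengths.

```python
import math

def power_mask(n_samples, on_len, off_len):
    """True where the device is powered (hypothetical fixed duty cycle)."""
    period = on_len + off_len
    return [(i % period) < on_len for i in range(n_samples)]

def capture(signal, mask):
    """Keep samples taken while powered; mark the rest as missing (None)."""
    return [x if on else None for x, on in zip(signal, mask)]

def gap_lengths(captured):
    """Lengths of each consecutive run of missing samples."""
    gaps, run = [], 0
    for x in captured:
        if x is None:
            run += 1
        elif run:
            gaps.append(run)
            run = 0
    if run:
        gaps.append(run)
    return gaps

# Assumed values for illustration: 1 s of a 440 Hz tone at 8 kHz,
# with the device on for 400 samples and off for 1200 samples per cycle.
sample_rate = 8000
signal = [math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(sample_rate)]
mask = power_mask(len(signal), on_len=400, off_len=1200)
captured = capture(signal, mask)

missing_fraction = sum(x is None for x in captured) / len(captured)
gaps = gap_lengths(captured)
print(f"missing fraction: {missing_fraction:.2f}, gaps: {len(gaps)}")
```

Under these assumed settings each gap spans 1200 consecutive samples (150 ms), far longer than one period of the tone, which suggests why interpolating across a gap from its two endpoints cannot recover the missing content.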