Abstract

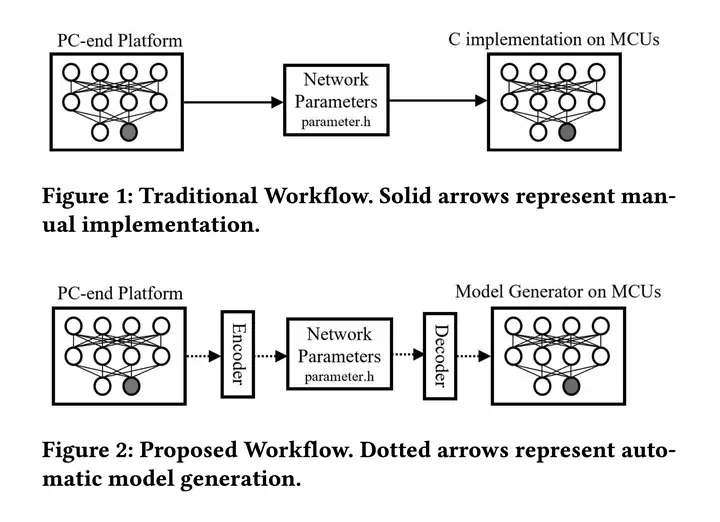

Resource-optimized deep neural networks (DNNs) now run on microcontrollers to perform a wide variety of audio, image, and sensor data classification tasks. Despite comprehensive deep learning tool support for 32-bit microcontrollers, performing deep learning inference on 16-bit microcontrollers remains a challenge. Although some tools exist for implementing neural networks on 16-bit systems, there is generally a large gap in efficiency between the development tools for 16-bit microcontrollers and those for 32-bit (or higher) systems. There is also a steep learning curve that discourages beginners inexperienced with microcontrollers and C programming from developing efficient and effective deep learning models for 16-bit microcontrollers. To fill this gap, we have created a neural network model generator that (1) automatically transfers the parameters of a pre-trained DNN or convolutional neural network (CNN) model from commonly used frameworks to a 16-bit microcontroller, and (2) automatically implements the model on the microcontroller to perform on-device inference. The optimization of data transfer saves time and minimizes the chance of error, and the automatic implementation reduces the complexity of implementing DNNs and CNNs on ultra-low-power microcontrollers.